Twitter A11y

Goal: Make visual content on Twitter more accessible

Scope: Accessibility Design class at NYU, part of a Master's degree program

Time Frame: 5 weeks, Spring 2022

Role: UX Researcher, UI Designer

Tools: Figma, Mural

This project was completed through NYU's Looking Forward class, taught by Regine Gilbert and Gus Chalkias. Students and the Twitter Accessibility team worked together to come up with creative solutions that make visual content more accessible in the Twitter app.

Background and Problem Space

"Media on Twitter is predominately visual—such as data visualizations, emoji, picture stickers, and ASCII art—presenting challenges to users who have cognitive, learning, or sensory disabilities. We (Twitter) want to explore how other sensory modalities, like sound and haptics, can make these media types more accessible."

Deliverable: Create a prototype that explores a new creative concept for how visual content on Twitter can be made more accessible for the low vision and blind community.

We then created a user journey and two personas to capture the different needs and goals of Twitter users.

Design Process

To brainstorm ideas, we created four different sketches, which we eventually merged into the final design.

The first two sketches were inspired by Nefertiti Matos, a voice-over artist who narrates movie trailers and one of the guest speakers in our class. In her talk, she explained that some users prefer shorter descriptions while others prefer extended descriptions.

Research

We began our qualitative research by reviewing articles and academic studies on making visual content more accessible. This research suggested that alt-text libraries improve the discoverability of content. However, we identified a gap in the literature around translating visual humor and conveying context: even when a meme had alt-text, it usually offered little contextual information. Reddit and YouTube were where we found the most feedback on the inaccessibility of memes, and users there repeatedly said they prefer having memes explained to them conversationally. We also discovered Say My Meme, a podcast that describes memes for blind and visually impaired people.

Solution #1

This solution lets users choose in their settings whether memes are read out with short alt-text or extended alt-text.

Solution #2

This solution allows users to quickly alternate between short alt-text and longer alt-text. It also lets users listen to a user-generated voice note that conveys intonation and gives a more personal description of the meme.

Solution #3

This solution requires users to write out a descriptive explanation of the meme when they add alt-text. It also allows users to quickly alternate between short alt-text and longer alt-text.

Solution #4

This solution takes users directly to the knowyourmeme.com website. As a result, users would have to leave Twitter and open another website to get the description, then return and listen to the alt-text to get the context. It also assumes that knowyourmeme.com is itself accessible.

Final Design

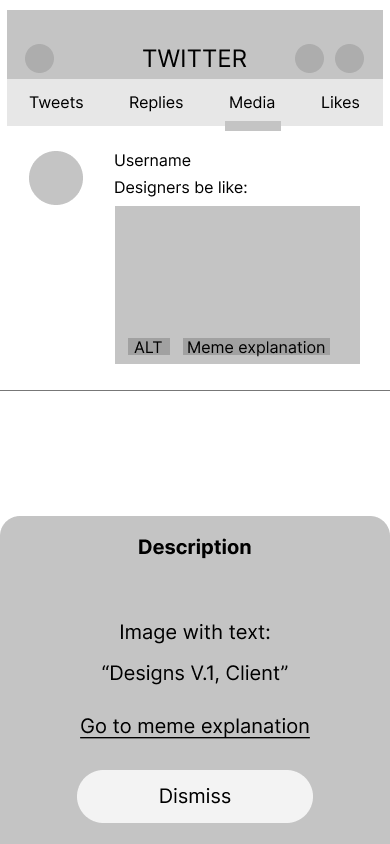

Our solution builds on the existing alt-text feature in the Twitter app by adding a tab bar that lets users navigate between regular alt-text, a description from www.knowyourmeme.com, and an audio description. With the audio description, Twitter users can explain the meme with a personal touch, intonation, and humor, much like a voice note.

Reflection

This project was a great opportunity to think outside the box about translating visual humor to make content on Twitter more accessible. It was a valuable learning experience, and with the guidance of the Twitter Accessibility team, we crafted a creative solution that is not only technically feasible but also built on features already available in the app.

For future work on this feature, we think it is important to research how the solution could also benefit deaf-blind users. It would be interesting to explore whether the knowyourmeme.com description could be made available on a braille display.

Given our time constraints, we were not able to conduct formal in-person research with target users. This, along with proper concept and usability testing, would be a high priority if we were to continue the project.

Lastly, we suggest considering how this solution could scale, particularly across languages and to other popular visual formats such as GIFs, animations, and emoji.